Having played around with the managed Kubernetes offerings of various cloud players (DO, AWS, GCP), I was wondering if it was possible to do this on the cheap. My site doesn’t have much traffic or anything complicated really, so running it off a $5 DO droplet is reasonable. Sadly, managed Kubernetes offerings don’t come that cheap. (Sure, I could leech off the starting $300 GCP credit for a year then keep hopping accounts, but…)

Then I read about k3s. The people behind Rancher made it as a lightweight (but functionally complete) Kubernetes distro. Lightweight, they say… Just how light? (Imagine a weird maniac light in my eyes here.) Could I run it on a $5 droplet?

Long story short

Yes.

Chapter 1: the groundwork

Getting a $5 droplet is easy. Just sign up for Digital Ocean and then create one (that link’s referral will get you some free credit to get started with). I first tried to use CoreOS, but I couldn’t get it working (kept running into permission problems), so I ended up going with Ubuntu. Installing k3s is easy too: just pipe curl into sh!

That was the easy part though. There are a few bits that managed Kubernetes provides that have to be done manually. The three I had some fun with were persistent volumes, ingress controllers and memory pressure. The first two are magically handled by cloud providers, while the memory issues come from the node being tiny.

Chapter 2: persistent volumes

Dynamically provisioning persistent volumes was pretty much out of the question. I didn’t want to provide all the (likely significant amount of) glue that managed k8s does and also pay for the extra storage. The $5 droplet has 25 gigs of disk space, and I figured that should be more than enough. Sure is.

Luckily for me, there is a local static provisioner available. I set it up following the getting started guide and it worked without problems. One thing I had to figure out was how to “mount” folders: the answer is bind mounting.

One catch with this method is that the path on the host has to be the same as the path where the volume is mounted in the container. So if a container tries to mount a volume at, for example, /usr/local/foo, then that path has to be a bind mount on the host too. This gave me some headaches when dealing with images that wouldn’t let me customize their volume mounts (mostly Bitnami Helm charts).

In the end I created two new storage classes, one for mounts under /mnt (for nice images where I could customize the mount paths) and one for those under /bitnami (guess). This means that containers that request the first storage class would have mount paths like /mnt/mounted-folder. Those folders have to be prepared beforehand and bind mounted.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: local-provisioner-config
  namespace: default
  labels:
    heritage: "Tiller"
    release: "release-name"
    chart: provisioner-2.3.2
data:
  storageClassMap: |
    nice-disks:
      hostDir: /mnt
      mountDir: /mnt
      blockCleanerCommand:
        - "/scripts/shred.sh"
        - "2"
      volumeMode: Filesystem
      fsType: ext4
    bitnami-disks:
      hostDir: /bitnami
      mountDir: /bitnami
      blockCleanerCommand:
        - "/scripts/shred.sh"
        - "2"
      volumeMode: Filesystem
      fsType: ext4
```

I have a /storage folder that contains the data directories. When I add a new service, I create a subfolder there (and in the folder of the storage class) and add a new line to /etc/fstab. At first I was mounting them manually with mount --bind, but this proved unreliable and a pain in the ass. The fstab lines are like /storage/source-folder /mnt/mount-path none bind.

Then specifying one of those storage classes when adding new services makes it work. But since I just kept punching the template until it worked (and never touched it again), I ended up with the provisioner running in the default namespace. I’m not sure if running it in kube-system would break anything and at this point I’m not willing to risk it.
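As a rough sketch of what requesting one of those storage classes can look like: the local provisioner's getting started guide pairs each class with a StorageClass object that has no dynamic provisioner, and a claim then asks for that class by name. The class name nice-disks comes from the config map above; the claim name and the requested size are made up for illustration.

```yaml
# StorageClass matching the "nice-disks" entry of storageClassMap.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nice-disks
# Volumes are created by the static provisioner, not on demand.
provisioner: kubernetes.io/no-provisioner
# Delay binding until a pod actually uses the claim.
volumeBindingMode: WaitForFirstConsumer
---
# Hypothetical claim; name and size are assumptions for this example.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-data
  namespace: default
spec:
  storageClassName: nice-disks
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```

The claim binds to one of the volumes the provisioner discovered under /mnt, so the bind-mounted folder has to exist before the pod starts.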

Chapter 3: networking and domain certificates

I feel really bad about how long this took me to get working. I didn’t realize that k3s came with Traefik installed, so I kept trying to set up an ingress controller, which then of course ended up clashing with Traefik and nothing worked. I even tried setting up MetalLB, to no avail.

Actually all this takes is adding the ingress class annotation kubernetes.io/ingress.class: "traefik" so Traefik knows it should deal with the ingress and the rest is magic.
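For illustration, a minimal Ingress carrying that annotation might look like the sketch below. The Ingress name, the backend Service (my-site) and its port are assumptions, not my actual setup. The manifest uses the current networking.k8s.io/v1 API; older clusters used extensions/v1beta1 with a slightly different backend syntax, and newer Kubernetes versions also offer spec.ingressClassName as a replacement for the annotation.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-site            # hypothetical name
  namespace: default
  annotations:
    # Tells Traefik that this Ingress is its responsibility.
    kubernetes.io/ingress.class: "traefik"
spec:
  rules:
    - host: valerauko.net
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-site    # hypothetical Service
                port:
                  number: 80     # assumed port
```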

One thing I envied the Digital Ocean load balancer for was how it could handle Let’s Encrypt certificates automagically. Considering how much effort they can be to deal with manually, I really wanted to automate it. Luckily there is a way.

The way is called cert-manager (because naming things is hard). Their documentation can be tricky to follow at times, which I suppose is because API updates don’t always get reflected in the docs.

Installing cert-manager is simple even without Helm. This is my Issuer setup to run on DO. I set up the API token from the control panel. I use DNS verification, because I want to use wildcard certificates.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: digitalocean-dns
  namespace: cert-manager
type: Generic
stringData:
  access-token: <api-token-here>
---
apiVersion: certmanager.k8s.io/v1alpha1
kind: Issuer
metadata:
  name: letsencrypt-k3s-issuer
  namespace: cert-manager
spec:
  acme:
    email: <email-address-here>
    server: https://acme-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: letsencrypt-k3s-issuer-secret
    dns01:
      providers:
        - name: digitalocean
          digitalocean:
            tokenSecretRef:
              name: digitalocean-dns
              key: access-token
```

With this, new certificates are trivial to issue, and the rest is all handled by cert-manager. For example, here’s my setup for valerauko.net.

```yaml
apiVersion: certmanager.k8s.io/v1alpha1
kind: Certificate
metadata:
  name: valerauko-net-cert
  namespace: default
spec:
  secretName: valerauko-net-tls
  issuerRef:
    name: letsencrypt-k3s-issuer
  commonName: '*.valerauko.net'
  dnsNames:
    - valerauko.net
  acme:
    config:
      - dns01:
          provider: digitalocean
        domains:
          - '*.valerauko.net'
          - valerauko.net
```

Then you can enable TLS for domains by adding an option like the one below to the Ingress spec. As smooth as it gets, if you ask me.

```yaml
tls:
  - hosts:
      - valerauko.net
    secretName: valerauko-net-tls
```

Chapter 4: memory and the like

With storage and network issues cleared, I started putting stuff into my cluster. I very soon noticed that some bigger installs (like MongoDB for example) can make the whole node unresponsive, which isn’t exactly ideal. I’ll later go into details about how I set up stuff like WordPress to run on k3s.

Looking at top, it was clear that memory pressure is a much bigger problem than CPU usage. Then I noticed that there is no swap! No surprise it didn’t like running so many things. I set up a 2 gig swapfile and haven’t seen any performance issues since.

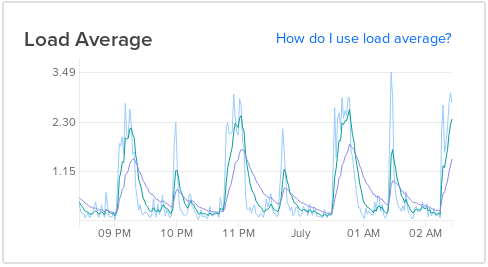

I mean, I kinda see that they exist, but I don’t know what’s happening. There are regular load spikes on the node. Since there is no change in any other metrics (at least not that I can see), I’m ignoring them for now, but if anyone knows what could be causing them, I’d be interested to learn. They happen at regular intervals, every hour. Bigger spikes on odd hours, smaller ones on even hours.