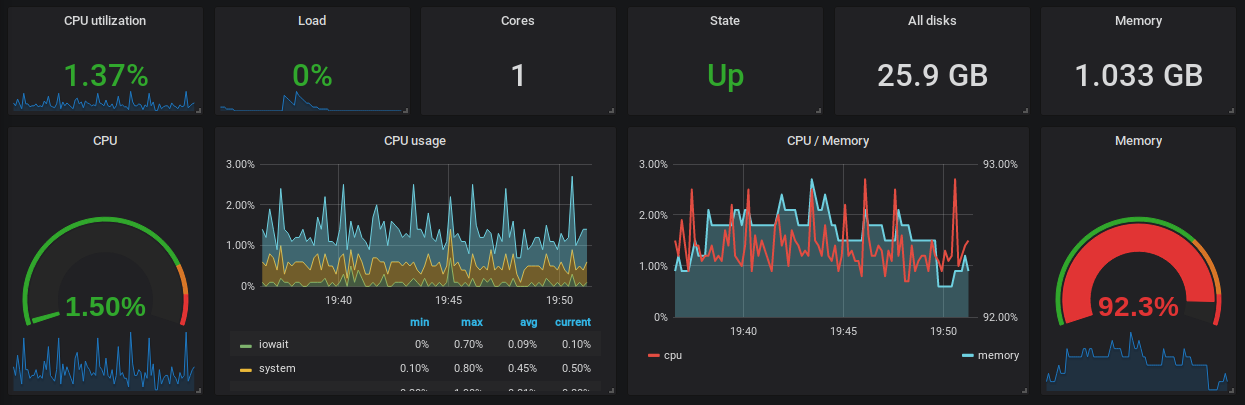

Earlier I wrote about how I set up Beats – Elasticsearch – Grafana to visualize the various metrics (and logs, hate me) from Kitsune’s dev server. There were a few tricky spots that didn’t work at first and took a while to figure out (or at least to get working).

The silent network

First up: on the system metrics dashboard I set up, the network traffic graph would flatline. It didn’t show anything. Empty. Except the records were all there: if I threw a raw query at Elasticsearch, I could see all the system.network.in.bytes (and system.network.out.bytes) values.

The root of the problem: those metrics can’t be used as-is, since each one is a cumulative sum of all network traffic. If you look at the system monitor on your desktop (or Task Manager, or whatever your OS calls it), you’ll usually see both a live graph of the current traffic and a running total of everything received and sent.

The metrics in question hold that ever-growing total. What I wanted was a live traffic graph like the one in the system monitor: the actual traffic at any given point in time. That’s the change in the sum between two points. That’s a derivative.
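To make the derivative idea concrete, here’s a minimal Python sketch (not what Grafana does internally) that turns cumulative counter samples into a per-second rate. It assumes the samples come as (timestamp in seconds, total bytes) pairs, like you might pull out of system.network.in.bytes; the function name and the sample numbers are made up for illustration.

```python
def counter_to_rate(samples):
    """Turn (timestamp_seconds, total_bytes) samples of a cumulative
    counter into (timestamp, bytes_per_second) pairs.

    Hypothetical helper for illustration; each rate is the change in the
    total between two consecutive samples divided by the time between them.
    """
    rates = []
    for (t0, v0), (t1, v1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        delta = v1 - v0
        if delta < 0:
            # The counter went backwards (e.g. after a reboot), so this
            # interval can't be measured; skip it instead of reporting
            # negative traffic.
            continue
        rates.append((t1, delta / dt))
    return rates


if __name__ == "__main__":
    # Made-up samples taken every 10 seconds
    samples = [(0, 1_000), (10, 6_000), (20, 6_000), (30, 26_000)]
    for t, rate in counter_to_rate(samples):
        print(f"t={t}s: {rate:.0f} B/s")
```

Skipping intervals where the counter goes down is a design choice: counters like these can reset, and a negative “traffic” value would be meaningless on a graph.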

Luckily, Grafana supports derivative aggregation out of the box, so there’s no need to set up Elasticsearch pipelines and all that yourself. Getting it to work as desired is a bit quirky, though.

The biggest catch: leaving the Date Histogram’s Interval on “auto” simply didn’t work. As soon as I set an exact Interval value, the graph popped to life and filled up in a flash. 10 seconds is the shortest option available, which is why I’m using it.
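For reference, Grafana’s Derivative metric on an Elasticsearch data source maps onto Elasticsearch’s derivative pipeline aggregation, which is computed between adjacent date histogram buckets. The Python sketch below builds roughly that kind of search body with a fixed 10s interval. It’s my approximation, not the exact request Grafana sends. The bucket names (“over_time”, “total”, “rate”), the helper name and the optional interface filter on system.network.name are assumptions for illustration, and fixed_interval needs Elasticsearch 7.2 or later (older versions call it interval).

```python
import json


def network_rate_query(field, interval="10s", interface=None, time_field="@timestamp"):
    """Build a search body: per-bucket max of a cumulative counter, plus its derivative.

    Hypothetical helper; bucket names and defaults are illustrative assumptions.
    """
    body = {
        "size": 0,  # only the aggregation results are needed, not the raw documents
        "aggs": {
            "over_time": {
                "date_histogram": {
                    "field": time_field,
                    # Fixed bucket size instead of "auto" (Elasticsearch 7.2+ syntax)
                    "fixed_interval": interval,
                },
                "aggs": {
                    # Highest counter value seen in each bucket
                    "total": {"max": {"field": field}},
                    # Change in "total" from the previous bucket,
                    # i.e. bytes per bucket (here, per 10 seconds)
                    "rate": {"derivative": {"buckets_path": "total"}},
                },
            }
        },
    }
    if interface is not None:
        # Metricbeat writes one document per network interface, so restrict
        # to one interface to avoid mixing different counters in a bucket
        body["query"] = {
            "bool": {"filter": [{"term": {"system.network.name": interface}}]}
        }
    return body


if __name__ == "__main__":
    # "eth0" is a made-up interface name
    print(json.dumps(network_rate_query("system.network.in.bytes", interface="eth0"), indent=2))
```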

The silent disk i/o

The other problematic bit was another i/o metric: disk i/o. This turned out to be a completely different problem from the network one above. Unlike network traffic, disk i/o supposedly already comes with derivative metrics, provided in iostat fields like system.diskio.iostat.read.per_sec.bytes.

The problem was that the relevant read/write fields were empty. Querying Elasticsearch directly, I could see the fields simply weren’t being filled in, which pointed to a problem with Metricbeat itself rather than some Grafana setting.
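A check like that can be done with an exists query sent to Elasticsearch’s _count API. Here’s a small Python sketch. The http://localhost:9200 address, the metricbeat-* index pattern and the lack of authentication are all assumptions about the setup, and the helper names are hypothetical.

```python
import json
import urllib.request


def exists_count_body(field):
    """Query body matching only documents where `field` has a value."""
    return {"query": {"exists": {"field": field}}}


def count_docs(base_url, index, body):
    """POST `body` to Elasticsearch's _count API and return the number of matches.

    Assumes an unauthenticated cluster reachable at `base_url`.
    """
    req = urllib.request.Request(
        f"{base_url}/{index}/_count",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["count"]
```

Something like count_docs("http://localhost:9200", "metricbeat-*", exists_count_body("system.diskio.iostat.read.per_sec.bytes")) returning 0 would mean the field never made it into Elasticsearch at all, which points at the shipper rather than the dashboard.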

It was weird, because I’d swear it worked when I set up the same system locally on Docker, so there was no reason it wouldn’t work with the same setup on an actual server. Except it didn’t. I double-checked that Metricbeat had the System module enabled: just make sure the modules.d/system.yml file is named exactly that, without a .disabled suffix. If it is disabled, running metricbeat modules enable system fixes that.
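That filename check is easy to script. A minimal Python sketch, assuming the package-install location /etc/metricbeat/modules.d (yours may differ, e.g. inside a Docker image); module_state is a hypothetical helper, not part of Metricbeat.

```python
from pathlib import Path


def module_state(modules_dir, module):
    """Report whether a Metricbeat module config is enabled, disabled or missing.

    Hypothetical helper: it only looks at file names in the modules.d directory.
    """
    directory = Path(modules_dir)
    if (directory / f"{module}.yml").is_file():
        return "enabled"  # plain .yml name: the module config is active
    if (directory / f"{module}.yml.disabled").is_file():
        return "disabled"  # .disabled suffix: the module config is ignored
    return "missing"


if __name__ == "__main__":
    # Assumed location for deb/rpm installs; adjust for your setup
    print(module_state("/etc/metricbeat/modules.d", "system"))
```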

The empty metrics turned out to be caused by the contents of that same system.yml: the diskio line was commented out, which disabled that metricset.
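To spot this kind of thing without eyeballing the file, here’s a rough Python sketch that lists which metricsets are active and which are commented out, plus a helper to uncomment one. It does plain line matching rather than real YAML parsing and assumes the layout of the sample config, where disabled metricsets look like #- diskio. The function names and the sample excerpt are hypothetical.

```python
import re

# A metricset list entry, e.g. "    - cpu", and its commented-out form, "    #- diskio"
ENABLED = re.compile(r"^[ \t]*-[ \t]*(\w+)[ \t]*$")
COMMENTED = re.compile(r"^[ \t]*#[ \t]*-[ \t]*(\w+)[ \t]*$")


def metricsets(config_text):
    """Return (enabled, commented_out) metricset names found in a module config."""
    enabled, commented = [], []
    for line in config_text.splitlines():
        if m := ENABLED.match(line):
            enabled.append(m.group(1))
        elif m := COMMENTED.match(line):
            commented.append(m.group(1))
    return enabled, commented


def uncomment_metricset(config_text, name):
    """Remove the leading '#' from the list entry for `name`, keeping its indentation."""
    pattern = re.compile(
        rf"^([ \t]*)#[ \t]*(-[ \t]*{re.escape(name)}[ \t]*)$", re.MULTILINE
    )
    return pattern.sub(r"\1\2", config_text)


if __name__ == "__main__":
    # Trimmed-down, hypothetical excerpt in the style of the sample system.yml
    sample = """- module: system
  period: 10s
  metricsets:
    - cpu
    - network
    #- diskio
    #- socket
"""
    print(metricsets(sample))
    print(metricsets(uncomment_metricset(sample, "diskio")))
```

The line matching is deliberately crude. A proper YAML parser would be more robust, but it would also throw away the comments, which are exactly what we’re looking for here.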

Uncommenting that line (and then reloading Metricbeat) was all it took. But it made me wonder why it was turned off in the first place. The sample config file has it turned off too, with no explanation why. I noticed no change in CPU usage, so it shouldn’t be a resource problem either. Mystery.