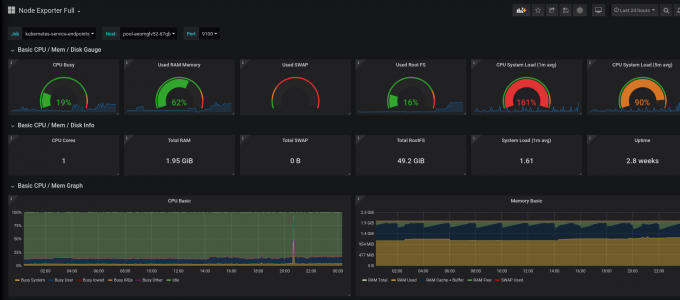

Symptoms: CPU load on all the nodes, but not the pods. Looking at Grafana, I noticed that CPU load on some of my nodes was constantly very high. At the same time, even the total CPU use of all the pods summed wasn’t above 0.4. What gives? This usually gives that the control plane is getting fried by something. It may be trying to relieve disk pressure, or in this case, trying to revive CSI.

Trying to figure out what was causing problems I checked the pods in kube-system with kubectl get pods -n kube-system. It quickly became apparent that there is a problem: disk-related pods like csi-resizer, csi-snapsotter and csi-provisioner were in CrashLoopBackOff.

I’ll be quite honest in that I’m not sure what the problem was. A few searches later I came to the conclusion that an earlier node reboot had left the pods with a corrupted DNS cache or something along those lines. Basically every issue I found with the symptoms I was seeing came down to DNS problems (longhorn/longhorn#2225, longhorn/longhorn#3109, rancher/k3os#811).

Alas I haven’t touched any of the networking machinery of Kubernetes (nor configured any of it for k3s) so my first idea was just the good old one from IT Crowd: “have you tried turning it off and on again?” So I did. Luckily another restart of the afflicted nodes solved the issue. I’m glad it did because I dread what I’d have had to do otherwise.