I ran into a really weird problem today. I noticed some strange config drift on one of my nodes (shit happens when I manually experiment in “production”), so I decided to reinstall/upgrade the k3s agent. For a while now I’ve been connecting my nodes through Tailscale, so that my homelab machine can join the cluster from my home network as well. k3s has a(n experimental) feature for “natively” integrating with Tailscale and it’s been working just fine so far.

Fixed my TLS

Usually there was no problem. Stuff worked just fine. Certificates were generated and renewed automatically. https:// links opened without ugly browser warnings about how you’re about to get hacked and it’s the end of the known universe.

But when it wasn’t “usually”, when Traefik just happened to restart for whatever reason, then all of that was obliterated. Since Traefik was running on ephemeral storage, i.e. nothing was really persisted, innocently tweaking some configuration (that resulted in a restart) could be catastrophic. You know, self-signed certificates and ugly browser warnings.

Upgrading the argo-cd Helm chart from 5.x to 8.x

I’ve been using Argo CD for GitOps automation for a very long time. I have it manage itself too! The other day I noticed that there was a new major version of Argo CD, so I decided to upgrade my stuff too. It did not go smoothly, though it wasn’t an issue with Argo CD itself.

It was the usual problem of Helm charts renaming and moving stuff around in their values.yaml, which results in significant breakage for (from a user’s perspective) no good reason. I summed up what I learned so others don’t have to play around with it so much.

The mythical modular monolith

So often I see people giving talks about how the microservice architecture is a failure. You end up losing transactional protections, you’ll have to “join” data across a network boundary, and that network boundary is “always” flaky. Wouldn’t it be much better if everything was in one process, where you could enjoy the benefits of transactions, neighboring data is just a method call away and the only network you have to worry about is the database connection?

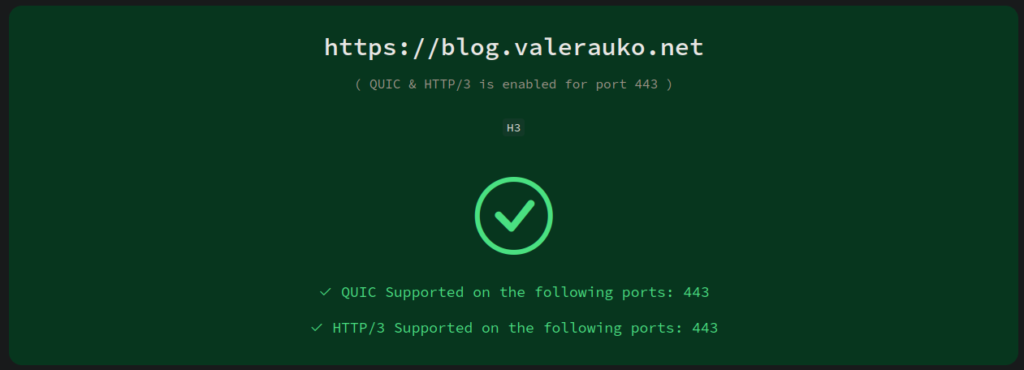

Why isn’t my HTTP/3 working?

On a sudden impulse, today I updated the Traefik in my cluster to v3. Unlike the transition from v1 to v2, v2 to v3 was very smooth. I only needed a few tiny tweaks to the configuration. One of those was that HTTP/3 support is no longer “experimental” and so it shouldn’t be configured as such. This started me on a weird quest to get my sites to use H3.

Longhorn trash weighing me down

Last year I gave Longhorn a try. It was a nice proposition for me: I was using local storage anyway, so the idea that pods would be independent of the nodes sounded delicious. Except the price was way too much.

At the time I thought that the only problem was Prometheus, which in itself is pretty heavy on disk IO, but it turns out the issue was with Longhorn itself. Also, as I found out, I wasn’t thorough enough deleting Longhorn stuff, which resulted in quite a few headaches this year.

Setting up additional container runtimes

The other day I noticed a post on one of my Misskey Antennas about “a container runtime written in Rust” called youki. This piqued my interest especially since its repository is under the containers org, which is also the home of Podman and crun for example. I’ll be honest and admit that I’m still not very clear about the exact responsibilities of such a runtime. It gets especially fuzzy when both “high level” runtimes like containerd and docker, and low level runtimes (that can serve as the “backend” of high level ones) like kata and youki are referred to just as “runtimes.” Anyway I decided to give it a try and see if I could get it to work with my k3s setup. It really wasn’t as easy as I’d hoped.

Adding Grafana annotations based on Flux CD events

Earlier this year I wrote about adding Grafana annotations based on Argo CD events. Since this year I actually got around to testing out Flux some more, it was natural to follow up with adding some Grafana annotations based on Flux events.

The Weave GitOps UI for Flux

Unlike Argo, Flux has no built-in UI. CD tools should be invisible most of the time, just quietly running in the back, getting changes into the cluster. This is fine for “most of the time”, when I’m not making any changes myself to the applications or to the cluster itself. It can just watch the various Helm charts and other (git) sources and deploy the changes as configured. It’s nice that I can be sure that my cluster has the latest possible versions of everything running within 10-15 minutes of release (depending on the Flux interval configured) without ever touching kubectl.

Trying FluxCD

When you create a whole new FluxCD setup from zero, it’s really easy: use flux bootstrap. It does “everything” for you. In my case I tried this setup first for last year’s advent calendar. Except back then I had an expedition scheduled for the first half of December, so this took the back seat and was eventually forgotten. Over the past year, everything I did back then slowly sank into oblivion, so I had to start again pretty much from zero.

I did set up the repo and a cluster (on Civo) back then, but I quickly tore down the cluster when I realized I wouldn’t be able to test it all out. The repo stayed though, so now I was starting afresh with a k3s cluster spanning three Nanodes (that’s a Linode referral link with a $100 credit over 60 days) and a repository already set up from last year.

Tags

ale anime art beer blog clojure code coffee deutsch emo english fansub fest filozófia food gaming gastrovale geek hegymász jlc kaja kubernetes kultúra language literature live magyar movie másnap politika rant sport suli szolgálati közlemény travel társadalom ubuntu university weather work zene 日本 日本語 百名山 軽音 七大陸最高峰チャレンジ
